Building a Clean, Scalable Quant Research Pipeline in Python

Quantitative trading thrives on two things: sound domain logic and solid engineering discipline. A strategy may look strong in theory, but without reproducible pipelines, reliable data flows, and testable models, it becomes impossible to scale.

Why Engineering Discipline Matters in Quant

Markets reward consistency. A strategy must:

- Use trustworthy, well-validated data

- Avoid lookahead bias

- Be tested end-to-end

- Produce reproducible results

- Handle data at scale (minute bars, tick data, multi-asset universes)

Good engineering practices—clean code, modularity, CI/CD, versioning—are what allow a quant team to grow from experiments to real capital deployment.

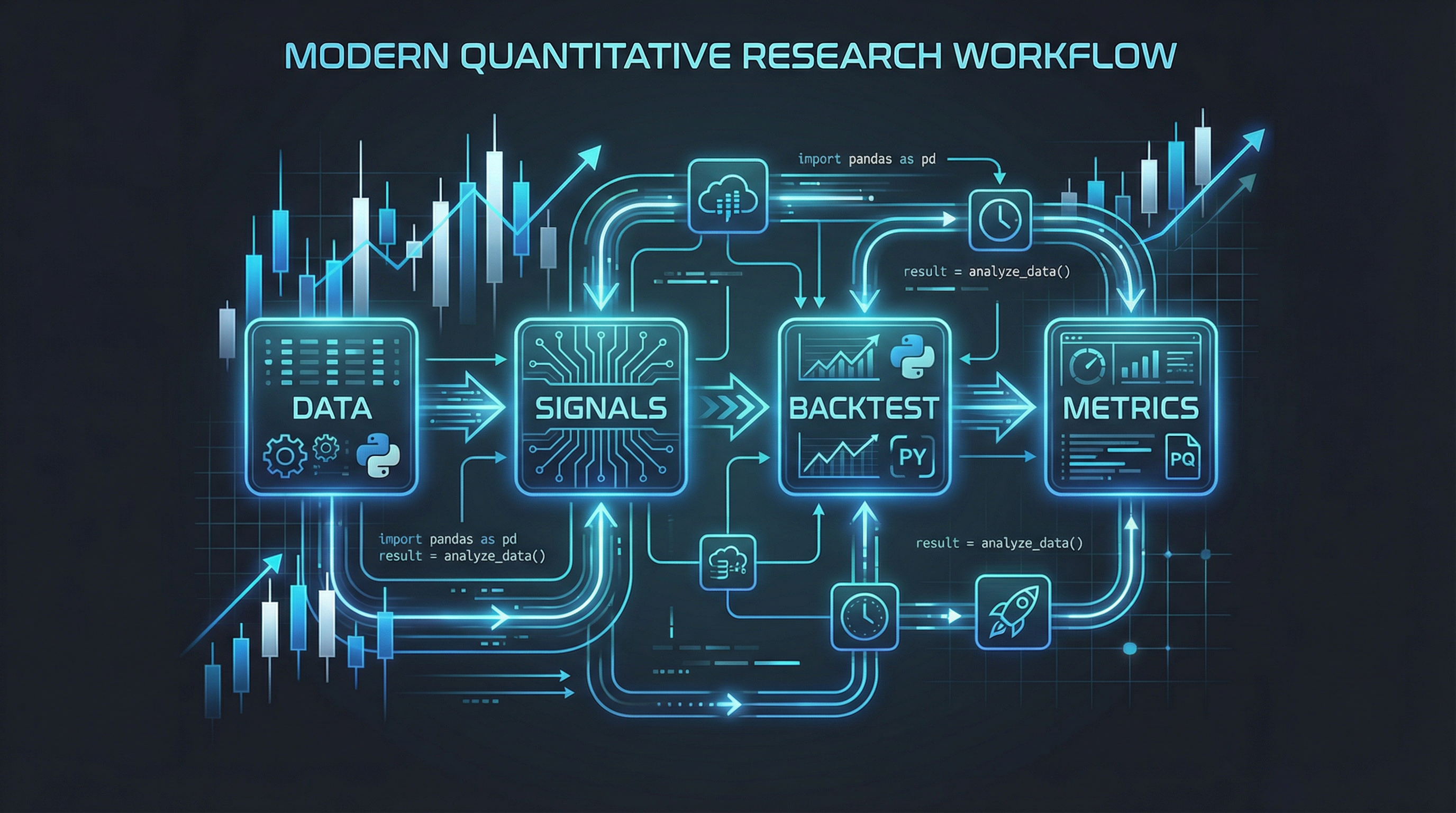

The Backbone of Any Research Workflow

A clear project layout avoids chaos when strategies multiply:

```
quant_project/
│
├─ data/                 # Raw & processed files (CSV, Parquet)
├─ notebooks/            # Idea exploration
├─ src/
│  └─ quant/
│     ├─ data.py         # Data loaders, validators, resamplers
│     ├─ strategy.py     # Signal generation
│     ├─ backtest.py     # Vectorized backtester
│     ├─ costs.py        # Fees & slippage models
│     ├─ risk.py         # Position sizing & controls
│     └─ metrics.py      # Performance analytics
└─ tests/                # pytest unit tests
```

Separate ingestion, transformation, business logic, and testing.

From Raw Data to Research-Ready Features

Quant workflows depend on clean, validated data. Engineering principles help:

1. Use schema validation

- Ensure datetime, OHLCV columns, and frequency integrity

- Enforce sorted timestamps

- Catch duplicated or missing rows early

2. Store data in Parquet

- Compressed

- Columnar

- Fast to query

- Ideal as your research layer on top of raw vendor files

3. Decouple ingestion from research

Your data loader becomes a stable service-like module—not something embedded in notebooks.
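One way to sketch these rules as a data.py module, assuming OHLCV bars in a pandas DataFrame with a DatetimeIndex and lowercase column names. The column list and the names validate_bars and load_bars are illustrative choices, not part of any library:

```python
from typing import Optional

import pandas as pd

# Illustrative OHLCV schema (an assumption); adapt names to your vendor files
REQUIRED_COLUMNS = ["open", "high", "low", "close", "volume"]


def validate_bars(df: pd.DataFrame, freq: Optional[str] = None) -> pd.DataFrame:
    """Hypothetical validator: raise ValueError on bad bars, else return df."""
    if not isinstance(df.index, pd.DatetimeIndex):
        raise ValueError("index must be a DatetimeIndex")
    missing = [c for c in REQUIRED_COLUMNS if c not in df.columns]
    if missing:
        raise ValueError(f"missing columns: {missing}")
    if df.index.has_duplicates:
        raise ValueError("duplicate timestamps")
    if not df.index.is_monotonic_increasing:
        raise ValueError("timestamps are not sorted")
    if df[REQUIRED_COLUMNS].isna().any().any():
        raise ValueError("missing values in OHLCV columns")
    # The bar's high/low range must contain its open and close
    body_hi = df[["open", "close"]].max(axis=1)
    body_lo = df[["open", "close"]].min(axis=1)
    if (df["high"] < body_hi).any() or (df["low"] > body_lo).any():
        raise ValueError("high/low inconsistent with open/close")
    if freq is not None:
        # Every step between consecutive timestamps should equal freq
        steps = df.index.to_series().diff().dropna()
        if (steps != pd.Timedelta(freq)).any():
            raise ValueError(f"gaps or irregular steps relative to {freq}")
    return df


def load_bars(path: str, freq: Optional[str] = None) -> pd.DataFrame:
    """Hypothetical loader: read a Parquet file and validate it before use."""
    return validate_bars(pd.read_parquet(path), freq=freq)
```

The strict frequency check suits continuous markets such as crypto; for exchange-traded assets with overnight and weekend gaps you would compare against a trading calendar instead. pd.read_parquet needs pyarrow or fastparquet installed.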

Strategy Logic: Simple, Clean, Testable

As a starting point, consider a Simple Moving Average (SMA) crossover:

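```python
import pandas as pd


def sma_signal(df: pd.DataFrame, fast: int = 20, slow: int = 50) -> pd.Series:
    # Rolling means of the close; early bars are NaN, and NaN > NaN is False (flat)
    fast_sma = df["close"].rolling(fast).mean()
    slow_sma = df["close"].rolling(slow).mean()
    # 1 = long when the fast average is above the slow one, 0 = flat
    signal = (fast_sma > slow_sma).astype(int)
    return signal.shift(1).fillna(0)  # avoid lookahead
```

The critical engineering detail is the shift(1): the position held on bar t is computed only from closes up to bar t-1, so the backtest never acts on a price it could not yet have observed.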

Vectorized Backtesting: Fast, Deterministic, Reproducible

A vectorized backtester avoids event loops and improves clarity:

- Compute trades via position.diff()

- Calculate execution prices with slippage adjustments

- Track cash & portfolio value deterministically

- No hidden state or side effects

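```python
# shares and cash: per-bar Series produced earlier in the backtest
total = shares * df["close"] + cash      # mark-to-market portfolio value
returns = total.pct_change().fillna(0)   # per-bar portfolio returns
```

Deterministic pipelines are essential for peer review and CI/CD: the same inputs must always produce the same equity curve.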
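A minimal sketch of how these steps fit together in a backtest.py, assuming a fixed share size per unit of signal and fills at each bar's close moved against the trade by a percentage slippage. run_backtest and its parameters are hypothetical names chosen for illustration:

```python
import numpy as np
import pandas as pd


def run_backtest(close: pd.Series, signal: pd.Series, size: float = 100.0,
                 initial_cash: float = 100_000.0, slippage_pct: float = 0.0005,
                 fee_per_share: float = 0.0) -> pd.DataFrame:
    """Hypothetical vectorized backtest; signal must already be lagged one bar."""
    shares = signal.reindex(close.index).fillna(0) * size   # holdings per bar
    trades = shares.diff().fillna(shares)                   # bar 0 trades into its position
    # Buys fill above the close, sells below it (assumed % slippage model)
    exec_price = close * (1 + slippage_pct * np.sign(trades))
    fees = trades.abs() * fee_per_share
    cash = initial_cash - (trades * exec_price + fees).cumsum()
    total = shares * close + cash                           # mark-to-market equity
    returns = total.pct_change().fillna(0)
    return pd.DataFrame({"shares": shares, "trades": trades, "cash": cash,
                         "total": total, "returns": returns})
```

Fixed share sizing keeps every step a column operation; sizing from current equity would make holdings path-dependent and push you back toward a loop. Cash can go negative when size times price exceeds initial_cash, which this sketch treats as implicit leverage rather than an error.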

Transaction Costs & Market Reality

Realistic execution modeling includes:

- Fixed per-share fees

- Slippage (spread or %-based)

- Partial fills for large orders

- Volume-based constraints

A clean costs.py keeps the model modular and reusable across strategies.
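The cost components above can live as small, independent functions in costs.py. This sketch assumes trades are signed share quantities and that the unfilled remainder of a capped order is simply dropped rather than carried to the next bar; all names and default values are illustrative, not real broker figures:

```python
import numpy as np
import pandas as pd


def fixed_fee(trades: pd.Series, fee_per_share: float = 0.005,
              min_fee: float = 1.0) -> pd.Series:
    """Hypothetical per-share commission with a minimum on each nonzero order."""
    raw = trades.abs() * fee_per_share
    # Keep 0 where nothing traded; otherwise charge at least min_fee
    return raw.where(trades == 0, np.maximum(raw, min_fee))


def execution_price(price: pd.Series, trades: pd.Series,
                    half_spread: float = 0.0, pct: float = 0.0) -> pd.Series:
    """Fill price moved against the trade by half the spread plus a % impact."""
    return price + np.sign(trades) * (half_spread + price * pct)


def cap_by_volume(trades: pd.Series, volume: pd.Series,
                  max_participation: float = 0.10) -> pd.Series:
    """Partial fills: limit each order to a fraction of the bar's volume."""
    cap = volume * max_participation
    return np.sign(trades) * np.minimum(trades.abs(), cap)
```

If you cap trades this way, recompute holdings as the cumulative sum of the filled trades so positions and cash stay consistent.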

Risk Management: The True Alpha Protector

Good quants engineer constraints, not just ideas. Examples:

- Maximum position size per symbol

- Daily max loss

- Portfolio exposure caps

- Volatility-targeted sizing (ATR or GARCH-based)

- Stop-loss / profit lock mechanisms

Separating these rules into a risk.py module ensures clarity and testability.
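As an example of a sizing rule in risk.py, here is a volatility-targeting sketch combined with a per-symbol position cap. It swaps in a plain rolling standard deviation of returns as the volatility estimate (not ATR or GARCH) and assumes 252 periods per year for daily bars; the function name is hypothetical:

```python
import numpy as np
import pandas as pd


def vol_target_weights(close: pd.Series, signal: pd.Series,
                       target_vol: float = 0.10, lookback: int = 20,
                       max_weight: float = 1.0,
                       periods_per_year: int = 252) -> pd.Series:
    """Hypothetical sizing rule: scale a lagged signal toward a target volatility."""
    rets = close.pct_change()
    # Annualized rolling-std vol, shifted so the weight on bar t uses returns up to t-1
    realized = rets.rolling(lookback).std().shift(1) * np.sqrt(periods_per_year)
    raw = signal * (target_vol / realized)
    # Per-symbol cap; warm-up bars (NaN vol) and 0 * inf cases become flat
    return raw.clip(lower=-max_weight, upper=max_weight).fillna(0.0)
```

The one-bar shift on the volatility estimate matters for the same reason as the shift on the signal: sizing on bar t must not use bar t's return.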

Metrics: Engineering + Finance in Harmony

The performance layer blends quant analytics and reproducible computation:

- Annualized return

- Annualized volatility

- Sharpe & Sortino

- Maximum drawdown

- Trade expectancy

- Turnover

- Exposure over time

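Because these metrics come from deterministic code, they can double as automated checks in CI: run on a fixed dataset, a change that unexpectedly moves Sharpe or drawdown fails the build instead of slipping through as a regression.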
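A compact sketch of several of these metrics, assuming per-bar simple returns, a zero risk-free rate, and 252 periods per year. Trade expectancy is left out because it needs per-trade P&L rather than bar returns; the function name and dictionary keys are hypothetical:

```python
from typing import Optional

import numpy as np
import pandas as pd


def performance_summary(returns: pd.Series,
                        positions: Optional[pd.Series] = None,
                        periods_per_year: int = 252) -> dict:
    """Hypothetical metrics helper (zero risk-free rate assumed)."""
    equity = (1 + returns).cumprod()
    ann_factor = np.sqrt(periods_per_year)
    # Downside deviation: RMS of negative returns, with non-negative bars as 0
    downside_dev = np.sqrt((returns.clip(upper=0) ** 2).mean())
    # Treat starting capital (1.0) as a peak so an initial loss counts as drawdown
    peak = equity.cummax().clip(lower=1.0)
    stats = {
        "ann_return": equity.iloc[-1] ** (periods_per_year / len(returns)) - 1,
        "ann_vol": returns.std() * ann_factor,
        "sharpe": returns.mean() / returns.std() * ann_factor,
        "sortino": returns.mean() / downside_dev * ann_factor,
        "max_drawdown": (equity / peak - 1).min(),
    }
    if positions is not None:
        trades = positions.diff().fillna(positions)   # bar 0 trades into its position
        stats["turnover"] = trades.abs().mean()       # avg position change per bar
        stats["exposure"] = (positions != 0).mean()   # share of bars with a position
    return stats
```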

Testing: The Hidden Power Tool in Quant

Institutions rely heavily on pytest-driven validation:

- Validate no lookahead usage

- Ensure all timestamps are strictly increasing

- Test strategy outputs on synthetic data

- Ensure slippage/costs are applied correctly

- Regression tests for strategy performance

Good quants treat test coverage like capital preservation: non-negotiable.
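A sketch of what such a pytest file might contain. The lookahead test perturbs future prices and checks that earlier signals do not move; sma_signal is repeated so the file runs on its own, while make_prices and the test names are hypothetical:

```python
import numpy as np
import pandas as pd


def sma_signal(df, fast=20, slow=50):
    # Copy of the strategy function above so this test file is self-contained
    fast_sma = df["close"].rolling(fast).mean()
    slow_sma = df["close"].rolling(slow).mean()
    return (fast_sma > slow_sma).astype(int).shift(1).fillna(0)


def make_prices(n=300, seed=0):
    """Hypothetical helper: a reproducible random-walk close series."""
    rng = np.random.default_rng(seed)
    close = 100 * np.exp(np.cumsum(rng.normal(0, 0.01, n)))
    idx = pd.date_range("2024-01-01", periods=n, freq="D")
    return pd.DataFrame({"close": close}, index=idx)


def test_no_lookahead():
    # Triple every price from bar `cut` on; signals through bar `cut` must not change
    df = make_prices()
    cut = 200
    shocked = df.copy()
    shocked.iloc[cut:, 0] *= 3.0
    pd.testing.assert_series_equal(sma_signal(df).iloc[: cut + 1],
                                   sma_signal(shocked).iloc[: cut + 1])


def test_timestamps_strictly_increasing():
    idx = make_prices().index
    assert idx.is_monotonic_increasing and not idx.has_duplicates


def test_signal_on_synthetic_trend():
    # A steadily rising series should end with a long signal
    idx = pd.date_range("2024-01-01", periods=100, freq="D")
    df = pd.DataFrame({"close": np.linspace(100, 200, 100)}, index=idx)
    assert sma_signal(df).iloc[-1] == 1
```

Run it with pytest. The perturbation test is generic: any signal function that fails it is reading prices from after the bar it trades on.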

Scaling to Multi-Asset Universes

Once the pipeline works for one asset, scaling becomes an engineering problem:

- Use wide DataFrames (assets as columns)

- Apply vectorized signals

- Allocate capital across symbols

- Use task orchestrators (Airflow/Dagster) for daily data pulls

- Store results in Postgres or DuckDB for dashboards

Your research system becomes a full data platform.
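With assets as columns, the SMA logic scales mostly by letting pandas operate on every column at once. This sketch assumes one column of closing prices per asset and uses equal weighting across active signals as a simple stand-in for a real allocation scheme; the function names are hypothetical:

```python
import numpy as np
import pandas as pd


def sma_signal_wide(close: pd.DataFrame, fast: int = 20,
                    slow: int = 50) -> pd.DataFrame:
    """Hypothetical: SMA crossover on every column (one asset each), lagged one bar."""
    fast_sma = close.rolling(fast).mean()
    slow_sma = close.rolling(slow).mean()
    return (fast_sma > slow_sma).astype(float).shift(1).fillna(0.0)


def equal_weight(signals: pd.DataFrame) -> pd.DataFrame:
    """Assumed allocation: split capital equally across active assets each bar."""
    n_active = signals.sum(axis=1).replace(0, np.nan)
    # Row-wise division; bars with no active asset end up fully in cash (0 weight)
    return signals.div(n_active, axis=0).fillna(0.0)


def portfolio_returns(close: pd.DataFrame, weights: pd.DataFrame) -> pd.Series:
    """Weights set at bar t's close earn each asset's return over bar t+1."""
    asset_rets = close.pct_change().fillna(0.0)
    return (weights.shift(1).fillna(0.0) * asset_rets).sum(axis=1)
```

The extra shift in portfolio_returns mirrors the single-asset backtester: a weight decided at one close only earns the following bar's move.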

Deployment: From Notebook to Production

A production trading system needs:

- Dockerized strategy & pipeline

- Nightly batch jobs pulling fresh data

- Real-time account reconciliation

- Live trading connectors (IB, Alpaca, CCXT)

- Logging, monitoring & alerts

- GitHub Actions running tests on every commit
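Account reconciliation boils down to comparing the positions your system believes it holds with what the broker reports. A broker-agnostic sketch, assuming both sides are already available as symbol-to-quantity dicts; fetching the broker side through IB, Alpaca, or CCXT is left out because each connector has its own API. Break, reconcile, and check_and_alert are hypothetical names:

```python
import logging
from dataclasses import dataclass
from typing import Dict, List

logger = logging.getLogger("reconciliation")


@dataclass
class Break:
    """Hypothetical record: a symbol whose internal and broker quantities differ."""
    symbol: str
    expected: float
    actual: float


def reconcile(expected: Dict[str, float], broker: Dict[str, float],
              tol: float = 1e-9) -> List[Break]:
    """Compare both position books; a symbol missing on one side counts as 0."""
    breaks = []
    for sym in sorted(set(expected) | set(broker)):
        exp, act = expected.get(sym, 0.0), broker.get(sym, 0.0)
        if abs(exp - act) > tol:
            breaks.append(Break(sym, exp, act))
    return breaks


def check_and_alert(expected: Dict[str, float], broker: Dict[str, float]) -> bool:
    """Log each break at ERROR level (attach alert handlers here); True if clean."""
    breaks = reconcile(expected, broker)
    for b in breaks:
        logger.error("position break %s: internal=%s broker=%s",
                     b.symbol, b.expected, b.actual)
    return not breaks
```

Routing breaks through the standard logging module keeps alerting pluggable: the same logger can feed a file, a monitoring agent, or a chat webhook handler without touching the reconciliation logic.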

Clean engineering is the difference between a hobby and a desk-level system.